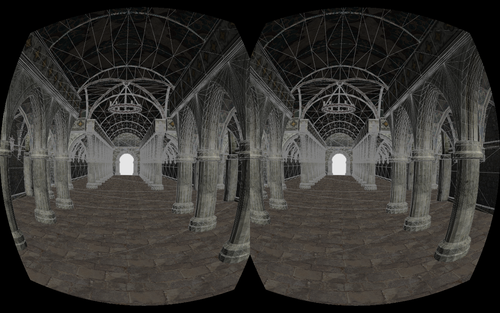

After working on Oculus Rift DK1 support on and off for a couple of weeks, I just managed to get everything up and running. Supporting head tracking was quite easy but – as the Oculus documentation correctly states – switching the rendering back-end required some major changes.

Luckily, I already had the render layout layer in place so rendering two views side-by-side for the same scene/camera was no problem. However, to compensate for the Rift’s lens distortion, a post-processing shader has to be applied, which meant a) switching to FBOs, b) adding a post-processing layer to the renderer and c) getting the distortion parameters to the shader code.

Managing FBOs got particularly complicated as the source FBO (the one the scene is rendered to) has to be multi-sampled and upscaled (the distortion contracts the result so the FBO has to be bigger than your target view). This is then blitted to resolve multi-sampling and then bounced back and forth between two FBOs, once for each post-processing step. Finally, the distortion shader is applied to render the contents to the window’s framebuffer.
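The order of passes described above can be sketched in a few lines of Python. This is only a bookkeeping sketch, not actual OpenGL code: the buffer names (`scene_msaa`, `ping`, `pong`, `window`) and the step names in the usage line are made up for illustration.

```python
def plan_passes(post_steps):
    """Return (pass_name, source, target) tuples for one frame.

    All buffer names are hypothetical labels, not real GL objects.
    """
    # Resolve the multi-sampled, upscaled scene FBO into a plain texture FBO.
    passes = [("blit/resolve", "scene_msaa", "ping")]
    src, dst = "ping", "pong"
    for step in post_steps:
        # Each post-processing step reads one FBO and writes the other.
        passes.append((step, src, dst))
        src, dst = dst, src  # swap roles for the next step
    # The distortion shader reads the latest result and writes to the window.
    passes.append(("distortion", src, "window"))
    return passes


if __name__ == "__main__":
    for p in plan_passes(["bloom", "tonemap"]):  # hypothetical step names
        print(p)
```

With an even number of post-processing steps the distortion pass reads from `ping`, with an odd number from `pong`, which is the part that is easy to get wrong when wiring up the real FBOs.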

What’s weird though is that the field of view of the Oculus Rift DK1 does not actually seem to utilize the complete display area, so upscaling does seem a bit pointless. Having to scale by 71% was also way beyond what I had expected, especially since the documentation discusses the performance impact of upscaling using an example of 25%.
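A rough sketch of where a factor in that range can come from: the scale is chosen so that the distorted image still reaches a chosen fit point (here the outer edge of one eye's view) after the radial distortion polynomial has been applied. The screen width, lens separation and distortion coefficients below are assumed DK1-like values, not numbers taken from this post; the real ones should be read from the SDK's device info.

```python
def distortion(r, k):
    """Radial distortion r * (k0 + k1*r^2 + k2*r^4 + k3*r^6)."""
    r2 = r * r
    return r * (k[0] + k[1] * r2 + k[2] * r2 * r2 + k[3] * r2 * r2 * r2)


def upscale_factor(h_screen_size, lens_separation, k, fit_x=-1.0):
    """Scale needed so the distorted image still reaches fit_x.

    Coordinates are in one eye's viewport units, -1..1 from edge to edge.
    """
    # Lens center offset from the middle of one eye's half of the screen.
    view_center = h_screen_size * 0.25
    eye_shift = view_center - lens_separation * 0.5
    center_offset = 4.0 * eye_shift / h_screen_size
    # Distance from the lens center to the point the image should reach.
    fit_radius = abs(fit_x - center_offset)
    return distortion(fit_radius, k) / fit_radius


# Assumed DK1-like values (screen width and lens separation in meters).
ASSUMED_K = (1.0, 0.22, 0.24, 0.0)
scale = upscale_factor(0.14976, 0.0635, ASSUMED_K)

if __name__ == "__main__":
    print(round(scale, 2))  # about 1.71 with the assumed numbers
```

Picking a fit point closer to the lens center gives a smaller factor at the cost of black borders, which may be a reasonable trade if the outer display area is not visible through the lenses anyway.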

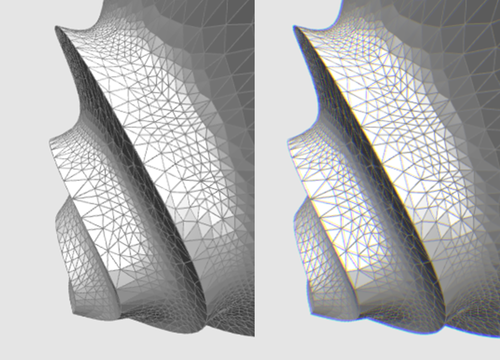

I also hadn’t realized before that the lenses cause a color shift (different colors are bent differently by the lens), which also has to be corrected in the distortion shader. The screenshot on the left is without correction for the color shift, the one on the right with it. However, this only seems to reduce the problem, not solve it completely, and it requires three texture lookups instead of one.
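The three lookups come from warping each color channel with a slightly different radial factor. Below is a minimal Python sketch of the coordinate math only (the real thing lives in a fragment shader). The parameter names and the coefficient values in the usage part are assumptions for illustration, not values from this post.

```python
def warp_coords(tex, lens_center, scale_in, scale, k, chroma):
    """Return per-channel texture coordinates for one fragment.

    k      -- radial distortion coefficients (k0..k3)
    chroma -- (red0, red1, blue0, blue1); green uses the plain warp
    """
    # Vector from the lens center, rescaled to the distortion function's units.
    tx = (tex[0] - lens_center[0]) * scale_in[0]
    ty = (tex[1] - lens_center[1]) * scale_in[1]
    r2 = tx * tx + ty * ty
    f = k[0] + k[1] * r2 + k[2] * r2 * r2 + k[3] * r2 * r2 * r2
    gx, gy = tx * f, ty * f  # warped vector used for the green channel

    def to_tex(factor):
        # Map the warped vector back into texture space.
        return (lens_center[0] + scale[0] * gx * factor,
                lens_center[1] + scale[1] * gy * factor)

    return {
        "r": to_tex(chroma[0] + chroma[1] * r2),  # lookup 1
        "g": to_tex(1.0),                         # lookup 2
        "b": to_tex(chroma[2] + chroma[3] * r2),  # lookup 3
    }


if __name__ == "__main__":
    # All numbers here are assumed, DK1-like example values.
    coords = warp_coords((0.4, 0.5), (0.25, 0.5), (4.0, 2.5),
                         (0.146, 0.234), (1.0, 0.22, 0.24, 0.0),
                         (0.996, -0.004, 1.014, 0.0))
    print(coords)
```

At the lens center all three coordinates coincide, and the further out a fragment lies, the more red and blue are sampled from slightly different places than green.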

I would recommend anyone who wants to implement Oculus Rift support to check out the sample code that is part of the SDK. Particularly the shader parameters (scaleIn, scale, etc) contain some tweaks that are non-obvious when simply reading through the PDF. For example, scale does not use the up-scale factor but actually its inverse. The authors apparently decided to use a more efficient fragment shader implementation as opposed to one that is easier to understand. The point about defining model space resolution (i.e. “how many units of OpenGL coordinate system is a meter”) could have been stressed more because it directly affects the effective eye separation (and thus the perception of the 3D effect).
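To make the two points concrete, here is a small Python sketch. The first function shows the scale parameter being derived from the inverse of the up-scale factor, in a layout similar to what the SDK sample appears to use; the second converts the interpupillary distance from meters to model-space units. Function names, the viewport numbers and the 64 mm IPD are assumptions for illustration.

```python
def shader_scale(w, h, aspect, upscale):
    """Return (scale, scale_in) for a viewport of size w x h in texture space.

    aspect is the viewport's pixel width divided by its pixel height.
    """
    inv = 1.0 / upscale  # the shader gets the inverse of the up-scale factor
    scale = (w * 0.5 * inv, h * 0.5 * inv * aspect)
    scale_in = (2.0 / w, 2.0 / h / aspect)
    return scale, scale_in


def eye_offsets(ipd_m, units_per_meter):
    """Horizontal camera offsets (left, right) in model-space units."""
    half = 0.5 * ipd_m * units_per_meter
    return (-half, half)


if __name__ == "__main__":
    # One eye covers half the texture width; 640x800 pixels per eye assumed.
    print(shader_scale(0.5, 1.0, 640 / 800, 1.71))
    # Same IPD, two different model scales: 1 unit = 1 m vs. 1 unit = 1 cm.
    print(eye_offsets(0.064, 1.0))
    print(eye_offsets(0.064, 100.0))
```

If the scene is modeled in centimeters but the eye offset is applied as if units were meters, the separation ends up a hundred times too small and the 3D effect all but disappears.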

All in all, great device though! I’m looking forward to receiving my DK2 device which should improve graphics quality a lot. Thanks to Erik for lending me his DK1!
